Generative AI-assisted clinical interviewing of mental health

Design

This study builds on data collected in a prior study9, in which participants with or without mental health diagnoses completed standardized rating scales designed to measure specific mental disorders. The prior study9 also collected open-response questions from the participants about their symptoms and related experiences; however, those responses were not used in the present study. Participants who voluntarily re-enrolled from the prior study engaged in a clinical interview conducted via chat with an AI assistant for mental health assessment and diagnostic evaluation. Following the interview, participants rated their experience of the AI-powered assessment. Separately, another AI assistant analyzed the interview data to produce a summary, including the most likely diagnosis and its justification, which participants could not access.

Participants

Participants for the current study were originally recruited through Prolific, an online research platform, as part of the prior study9. That sample included 550 participants: 450 individuals with self-reported, clinician-diagnosed mental health conditions and 100 healthy controls. Each diagnostic group included 50 participants, covering major depressive disorder (MDD), generalized anxiety disorder (GAD), bipolar disorder (BD), obsessive-compulsive disorder (OCD), attention-deficit/hyperactivity disorder (ADHD/ADD), autism spectrum disorder (ASD), eating disorders (ED), substance use disorders (SUD), and post-traumatic stress disorder (PTSD). During the prescreening phase of the prior study, participants confirmed that they had been diagnosed by a professional clinician and that the diagnosis was ongoing. They also reported their treatment status and diagnostic history. Participants were included only if they reported English as their first language.

For the current study, we recontacted these participants, resulting in a final sample of 303 participants, comprising 248 individuals with mental health conditions and 55 healthy controls.

The final sample consisted of 170 female participants, 110 male participants, 20 non-binary participants, and 3 individuals who preferred not to disclose their gender identity, with a mean age of 40.0 years (SD = 12.1). Participants reported their highest education level: high school (N = 122), undergraduate degree (N = 127), postgraduate degree (N = 45), or doctorate (N = 9). We explicitly included participants with comorbidities. This follows the rationale of ecological psychology, which emphasizes the situational diversity of mental health as a dynamic interplay of psychological, emotional, environmental, and social factors. To ensure sufficient data quality, participants were removed only if they failed at least one of the attention checks or provided nonsensical or low-quality text responses.

Ethics

The study was performed in accordance with relevant guidelines. Participants again provided informed consent, tailored to the current study, which ensured that they understood its purpose, procedures, and voluntary nature and which emphasized anonymity and GDPR compliance. The study was approved by the Swedish Ethical Review Authority (Etikprövningsmyndigheten; registration number 2024-00378-02).

Measures

AI-powered interview

Participants completed an AI-conducted clinical interview using the TalkToAlba software platform (TalkToAlba.com; see Appendix B for a sample dialog). The AI-powered interview is available for use with permission from the first author. TalkToAlba is designed to support mental health professionals through various AI-assisted features, including an AI therapist delivering CBT, as well as tools for recording, transcribing, and analysing patient-clinician meetings and interactions. The platform is currently used by clinicians across Sweden and other parts of Europe. For this study, participants accessed the interview via a secure web link and completed it in a web browser. They could choose to interact with the AI clinician either by typing and reading or by speaking and listening. The AI system, powered by an LLM, responded to input within a few seconds, simulating a natural conversational pace.

The AI-powered interview was divided into three phases: a hypothesis phase, a validation phase, and a final assessment phase, the latter of which was not disclosed to participants. The full wording of the prompts used in each phase is provided in Appendix A.

The AI assistant was built using OpenAI’s GPT-4 architecture, specifically the “gpt-4-turbo-preview” configuration. The model was not fine-tuned, nor did we upload additional documents related to mental health assessments. The language model analyzed the full dialogue in real time without automated annotations. To support consistency and reproducibility in responses, the model was initialized with a fixed seed value of 0 and a low temperature setting of 0.1; all other parameters followed OpenAI’s default configurations.
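The settings reported above could be collected in a single request configuration. The following is a minimal sketch, not the study's code: the helper name `build_request`, the message layout, and the placeholder prompt are assumptions for illustration, and only the model name, seed, and temperature are taken from the text. The actual prompts are those given in Appendix A.

```python
from typing import Any

MODEL = "gpt-4-turbo-preview"  # configuration named in the text
SEED = 0                       # fixed seed reported in the text
TEMPERATURE = 0.1              # low temperature reported in the text


def build_request(system_prompt: str, dialogue: list[dict[str, str]]) -> dict[str, Any]:
    """Assemble keyword arguments for a chat-completion request (hypothetical helper)."""
    # The full dialogue so far is sent on every turn, after the phase prompt.
    messages = [{"role": "system", "content": system_prompt}, *dialogue]
    return {
        "model": MODEL,
        "messages": messages,
        "seed": SEED,
        "temperature": TEMPERATURE,
    }


request = build_request(
    "<phase prompt from Appendix A>",  # placeholder, not the real prompt
    [{"role": "user", "content": "I have been feeling low lately."}],
)
# With the official `openai` Python package, such a dict could be passed as
# client.chat.completions.create(**request); all other parameters keep their defaults.
```

Note that OpenAI describes seeded sampling as best-effort determinism, so identical inputs may still occasionally produce different outputs.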

During the initial phase (i.e., hypothesis phase), the AI assistant engaged participants in a natural, conversational exchange aimed at exploring their mental health status. Through a series of open-ended questions, the AI assistant collected relevant information and formulated a preliminary hypothesis regarding the participant’s mental health condition. This hypothesis was grounded in the DSM-5 diagnostic framework and informed the next phase of the interview.

During the second phase (i.e., validation phase), the AI assistant conducted a structured, confirmatory clinical interview focused on validating the preliminary diagnosis. Drawing on DSM-5 criteria, the assistant assessed each diagnostic criterion one at a time, posing follow-up questions as needed to resolve uncertainties or ambiguities. This iterative questioning continued until all relevant criteria were addressed, and a clear and comprehensive diagnostic picture had emerged.

In the final and undisclosed phase (i.e., assessment phase), the AI assistant synthesized the information gathered to estimate the likelihood that the participant met criteria for each of the nine target mental health disorders. This assessment served as the AI-generated diagnostic output, which was later compared to standard rating scale results.

User experience evaluation

Following the interview, participants evaluated the AI assistant using both quantitative and qualitative measures. Using rating-scale questions, participants rated the AI on perceived empathy, relevance, understanding, and supportiveness. Participants also responded to open-ended questions, describing their experience in five descriptive words. Finally, participants indicated their preferences among different assessment modalities, comparing the AI-powered interview to traditional methods such as clinician-led interviews and standardized rating scales.

Rating scales of mental health disorders

Standardized rating scales were used to gather data on symptoms and to complement participants’ self-reported clinical diagnoses. These scales were completed by participants in Boehme et al. (in preparation) and used in this study to provide comparative data for AI assessments. For depression, we used the Patient Health Questionnaire-9 (PHQ-9)10, a nine-item tool using a 4-point Likert scale to measure depressive symptoms. Anxiety symptoms were assessed using the Generalized Anxiety Disorder-7 Scale (GAD-7)11, which consists of seven items rated on a similar scale. Obsessive-compulsive disorder (OCD) was measured using the Brief Obsessive–Compulsive Scale (BOCS)12, consisting of 15 items rated on a 3-point Likert scale and one open-response item to categorize obsessions and compulsions. For bipolar disorder, the Mood Disorder Questionnaire (MDQ)13 was used, comprising 14 binary (Yes/No) items and an additional question rated on a 4-point Likert scale.

To screen for ADHD, Part A of the Adult ADHD Self-Report Scale (ASRS)14 was applied, consisting of six items rated on a 5-point Likert scale. Autism Spectrum Disorder (ASD) was assessed using the Ritvo Autism and Asperger Diagnostic Scale (RAADS-14)15, a 14-item tool using a 4-point Likert scale.

Eating disorders were assessed using the Eating Disorder Examination Questionnaire Short (EDE-QS)16, which comprises 12 items scored on a 4-point Likert scale. Substance use was measured using the Alcohol Use Disorders Identification Test (AUDIT)17, featuring eight questions on a 5-point Likert scale and two on a 3-point scale, and the Drug Use Disorders Identification Test (DUDIT)18, with nine 5-point Likert scale items and two 3-point items.

Finally, for Post-Traumatic Stress Disorder (PTSD), the National Stressful Events Survey PTSD Short Scale (NSESSS-PTSD)19 was implemented. This tool includes one open-text response for describing a traumatic event and nine items rated on a 5-point Likert scale.

Cut-off scores for binary categorization (i.e., presence vs. absence of a diagnosis) were based on established thresholds commonly reported in the literature: PHQ-9 ≥ 10 (depression), GAD-7 ≥ 10 (anxiety), BOCS ≥ 8 (obsessive-compulsive disorder), ASRS ≥ 10 (ADHD), NSE ≥ 14 (PTSD), RAADS ≥ 14 (autism spectrum), EDE ≥ 18 (eating disorder), DUDIT ≥ 25 (substance use), MDQ ≥ 7 (bipolar disorder).
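The binary categorization above amounts to comparing each scale's total score with its threshold. A minimal sketch follows, assuming that total scores have already been computed per scale; the names `CUTOFFS`, `screen_positive`, and `classify`, and the example scores, are hypothetical. The thresholds and scale labels are copied from the list above.

```python
# Cut-off values as listed in the text (a score at or above the cut-off counts as present).
CUTOFFS = {
    "PHQ-9": 10,  # depression
    "GAD-7": 10,  # anxiety
    "BOCS": 8,    # obsessive-compulsive disorder
    "ASRS": 10,   # ADHD
    "NSE": 14,    # PTSD
    "RAADS": 14,  # autism spectrum
    "EDE": 18,    # eating disorder
    "DUDIT": 25,  # substance use
    "MDQ": 7,     # bipolar disorder
}


def screen_positive(scale: str, total_score: float) -> bool:
    """Return True when the total score reaches the scale's cut-off."""
    return total_score >= CUTOFFS[scale]


def classify(scores: dict[str, float]) -> dict[str, bool]:
    """Binarize a participant's total scores, one entry per scale provided."""
    return {scale: screen_positive(scale, score) for scale, score in scores.items()}


# Made-up totals for one illustrative participant.
example = classify({"PHQ-9": 12, "GAD-7": 9, "MDQ": 7})
print(example)
```

Because the comparison is inclusive, a score exactly at the threshold (MDQ = 7 in the example) is classified as present.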

Procedure

Participants provided written informed consent prior to participation, in accordance with approval from the Swedish Ethical Review Authority (Ref. No. 2024-00378-02). They then completed the AI-powered clinical interview, followed by a series of questions on their experience of the AI interaction, their preferences regarding assessment methods, and self-reported demographic and diagnostic information.

AI assistant

An AI assistant, based on OpenAI’s GPT-4 architecture (gpt-4-turbo-preview), was created to assess participants’ responses from the AI-powered clinical interviews according to the DSM-5 diagnostic criteria. The assistant was instructed to estimate the likelihood that each participant met the DSM-5 diagnostic criteria for the nine targeted disorders: major depressive disorder (MDD), generalized anxiety disorder (GAD), obsessive-compulsive disorder (OCD), bipolar disorder (BD), attention-deficit/hyperactivity disorder (ADHD/ADD), autism spectrum disorder (ASD), eating disorders (ED), substance use disorder (SUD), and post-traumatic stress disorder (PTSD). This AI-generated diagnostic measure is hereafter referred to as GPT. The exact wording of the prompt is provided in Appendix A. For binary classification purposes, a cut-off of ≥ 50% likelihood was used to indicate the presence of a diagnosis. That is, if the AI assistant estimated a probability of 50% or higher that the participant met the DSM-5 criteria for a given disorder, the disorder was classified as present. This cut-off was chosen to reflect a neutral decision boundary, where the AI was at least as confident in the presence of the disorder as in its absence, in line with conventional practice in probabilistic classification.
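The 50% decision rule can be illustrated as follows. This is a sketch under stated assumptions: it presumes the assistant's output has already been parsed into one percentage per disorder, and the names `DISORDERS`, `THRESHOLD`, and `binarize`, as well as the example percentages, are hypothetical.

```python
# The nine target disorders, abbreviated as in the text.
DISORDERS = ("MDD", "GAD", "OCD", "BD", "ADHD", "ASD", "ED", "SUD", "PTSD")
THRESHOLD = 50.0  # likelihood in percent; at or above counts as present


def binarize(likelihoods: dict[str, float], threshold: float = THRESHOLD) -> dict[str, bool]:
    """Map estimated likelihoods (0-100) to present/absent labels."""
    missing = set(DISORDERS) - set(likelihoods)
    if missing:
        raise ValueError(f"missing likelihoods for: {sorted(missing)}")
    return {d: likelihoods[d] >= threshold for d in DISORDERS}


# Made-up likelihoods for one illustrative participant.
estimates = {"MDD": 80, "GAD": 50, "OCD": 10, "BD": 5, "ADHD": 49.9,
             "ASD": 0, "ED": 15, "SUD": 20, "PTSD": 65}
labels = binarize(estimates)
present = [d for d, is_present in labels.items() if is_present]
print(present)
```

Several disorders can be classified as present for the same participant, which is consistent with the inclusion of participants with comorbidities.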

Statistics

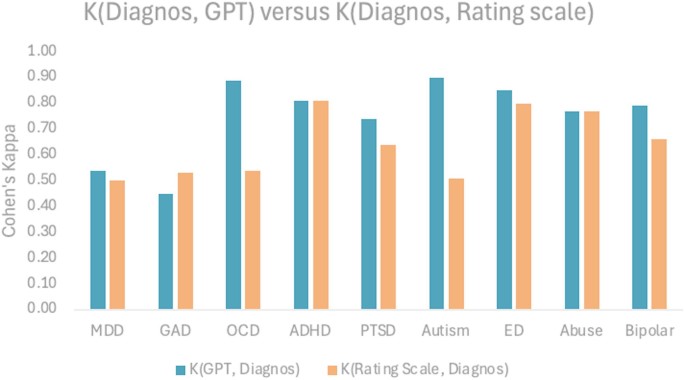

Diagnostic assessments were validated against participants’ self-reported clinical diagnoses, which were based on prior assessments made by their treating clinicians (referred to as Diag.), using binary classification (Table 1; Fig. 1). Agreement between this reference outcome and the AI-generated diagnosis (GPT), as well as the standardized rating scales (RS), was evaluated using Cohen’s Kappa, which measures classification agreement beyond chance. In addition, t-tests were used to evaluate whether the proportion of agreement with the self-reported diagnosis (Diag.) differed between GPT and RS.
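For two binary classifications, Cohen's Kappa is the observed agreement corrected for the agreement expected by chance, (p_o - p_e) / (1 - p_e). The sketch below computes it from scratch for illustration; the function name `cohens_kappa` and the toy labels are assumptions, and the study's actual analysis software is not specified in this section.

```python
def cohens_kappa(reference: list[bool], predicted: list[bool]) -> float:
    """Cohen's kappa for two binary label sequences of equal length."""
    if len(reference) != len(predicted) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(reference)
    # Observed agreement: share of cases where both labels match.
    observed = sum(r == p for r, p in zip(reference, predicted)) / n
    # Chance agreement from each rater's marginal rate of positive labels.
    ref_pos = sum(reference) / n
    pred_pos = sum(predicted) / n
    expected = ref_pos * pred_pos + (1 - ref_pos) * (1 - pred_pos)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Toy data: reference diagnosis (Diag.) versus a binary classifier output.
diag = [True, True, True, False, False, False, True, False]
pred = [True, True, False, False, False, True, True, False]
kappa = cohens_kappa(diag, pred)
print(kappa)  # 0.5 for this toy data: 75% observed vs. 50% chance agreement
```

A value of 1 indicates perfect agreement, and a value of 0 indicates agreement no better than chance.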
